Transforming Data Challenges into Success Stories: Meet Fission Labs' Data Engineers

In today's data-driven world, the role of data engineers in the success of your projects cannot be overstated. Fission Labs' team of data engineers stands as the ideal choice to propel your data-centric endeavors to new heights of achievement. With an extensive skill set and profound expertise in various data domains, our data engineers are poised to make your projects a resounding success. This comprehensive article will explore what sets Fission Labs' data engineers apart and why they are the perfect choice for your data-driven projects.

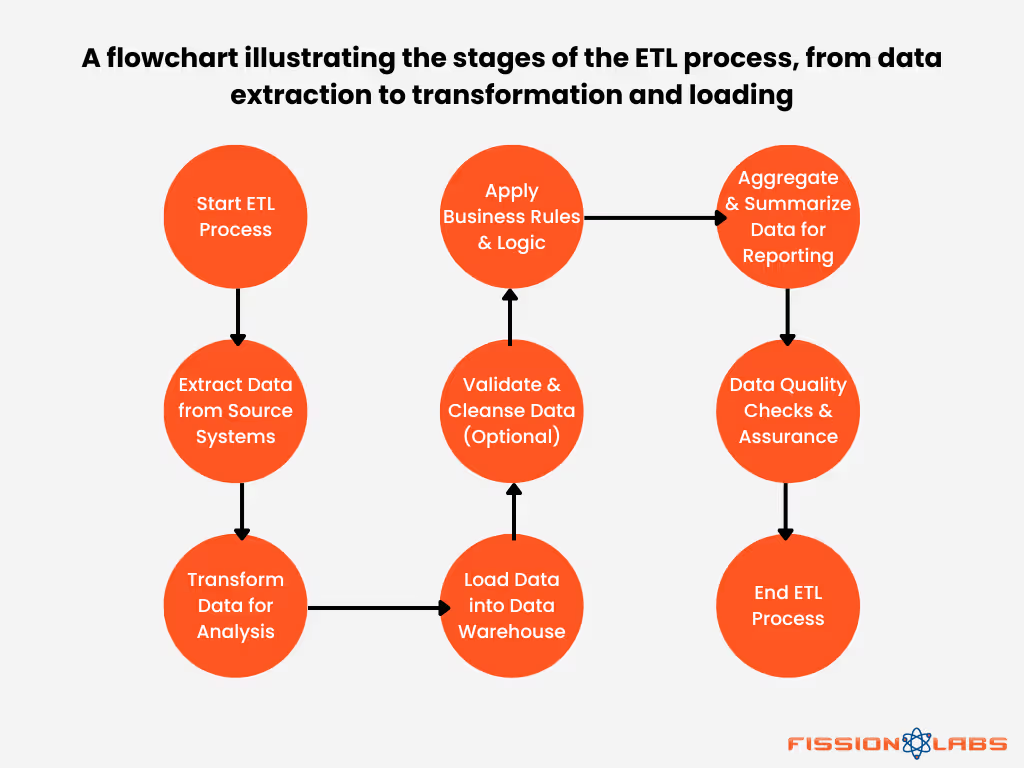

Mastering the ETL Craft

Extract, Transform, Load (ETL) Mastery

Our data engineers are not just proficient but masters of ETL processes. ETL serves as the backbone of efficient data processing, and our engineers ensure that data flows seamlessly, from extraction to transformation and loading. This expertise guarantees that your data is not just managed but transformed into a valuable asset for your projects.

Seamless Data Flow

What truly sets our data engineers apart is their ability to ensure the seamless flow of data. They design and implement ETL pipelines meticulously, maintaining data integrity and minimizing disruptions. This means your data is always available when needed, and any transformations or modifications are executed flawlessly.

Custom ETL Solutions

In the dynamic data landscape, one-size-fits-all solutions often fall short. Our data engineers excel in crafting custom ETL solutions tailored to your project's unique requirements. This flexibility ensures that your data processing needs are met with precision, whether you're dealing with structured, semi-structured, or unstructured data.

It begins by acquiring diverse datasets from various sources. Following this, we meticulously process and normalize the data, creating a single source of truth. The normalized data is then seamlessly integrated into our data warehousing system, providing a structured foundation for efficient retrieval. This sets the stage for comprehensive reporting and analysis, empowering stakeholders with actionable insights for informed decision-making. Our streamlined approach ensures data integrity and positions us at the forefront of data-driven excellence.

Proficiency in SQL and Python

SQL: The Query Language

SQL (Structured Query Language) is the bedrock of data manipulation and analysis. Our data engineers are not just knowledgeable but masters of this language. SQL is not just about writing queries; it's about optimizing them for performance, understanding database structures, and ensuring data consistency. With their SQL proficiency, our engineers ensure that your data is not just stored but leveraged for meaningful insights.

Python: The Swiss Army Knife of Data

Python is a versatile and widely used programming language for data analysis and manipulation. Our data engineers are not just proficient but adept at using it to create custom data solutions. Whether it's data cleansing, transformation, or even machine learning, Python is their tool of choice. This versatility ensures that your data projects are executed with precision and adaptability.

Combining SQL and Python

What sets our data engineers apart is their ability to combine SQL and Python for comprehensive data solutions. SQL is perfect for querying structured data, while Python excels at handling semi-structured and unstructured data. Our engineers understand the nuances of each language and use them synergistically to solve complex data challenges. This combination provides you with a holistic approach to data management and analysis.

Big Data Handling Expertise

The Challenge of Big Data

In today's data landscape, the volume, velocity, and variety of data are growing exponentially. This is where our data engineers truly shine. They are well-versed in managing vast volumes of data, making them the ideal partners for projects that involve massive datasets. Whether it's dealing with terabytes or petabytes of data, our engineers have the expertise to ensure that your big data projects run smoothly.

Efficient Data Storage and Retrieval

Handling big data isn't just about storing it; it's about retrieving and processing it efficiently. Our data engineers understand the intricacies of data storage systems, both traditional and distributed. They can design and implement data storage solutions that optimize data retrieval, ensuring that your project's performance remains at its peak.

Scaling for Future Growth

The data landscape is evolving rapidly. What's considered big data today might become normal data tomorrow. Our data engineers are forward-thinking, and they design solutions that can scale with your project's growth. This scalability ensures that your big data projects are not just for today but are future-proofed for tomorrow's challenges.

Hadoop and Spark Champions

Hadoop: Tackling Big Data

Hadoop has become synonymous with big data processing. Our data engineers are champions in working with Hadoop, an open-source framework for the distributed storage and processing of vast datasets. Hadoop is known for its fault tolerance and scalability, and our engineers leverage these features to handle large-scale data efficiently.

Apache Spark: Lightning-Fast Data Processing

When it comes to real-time data processing, Apache Spark is the go-to tool. Our data engineers are experts in utilizing Spark to process data at lightning speed, making it the ideal choice for projects that require real-time or near-real-time data analysis. Spark's in-memory processing capabilities are harnessed to deliver high-performance results.

Customized Data Processing Solutions

What sets our data engineers apart is their ability to craft customized solutions using Hadoop and Spark. They don't just rely on out-of-the-box configurations; they tailor these technologies to your project's specific requirements. This customization ensures that you get the most efficient data processing solutions, whether it's for batch processing or real-time analytics.

Cloud Data Pipelines

The Shift to Cloud Data Processing

The cloud has revolutionized data processing and storage. Our data engineers excel in creating efficient data pipelines on leading cloud platforms such as AWS, Azure, and Google Cloud. The cloud provides the flexibility and scalability needed for modern data projects. Our engineers understand the intricacies of cloud-based data solutions, ensuring that your data is not just processed but also stored securely in the cloud, ready for on-demand access.

Scaling on Demand

One of the key advantages of cloud data processing is the ability to scale on demand. Whether you have a sudden spike in data or need to downscale during lean periods, cloud-based solutions provide the flexibility to adapt. Our data engineers leverage this flexibility to ensure that your data pipelines can scale to your project's needs, optimizing costs and performance.

Cost-Effective Data Solutions

The cloud offers cost-effective data solutions, and our engineers understand how to make the most of this advantage. They design data pipelines that maximize cost-efficiency without compromising on performance. This ensures that your project's budget remains in check while still delivering high-quality results.

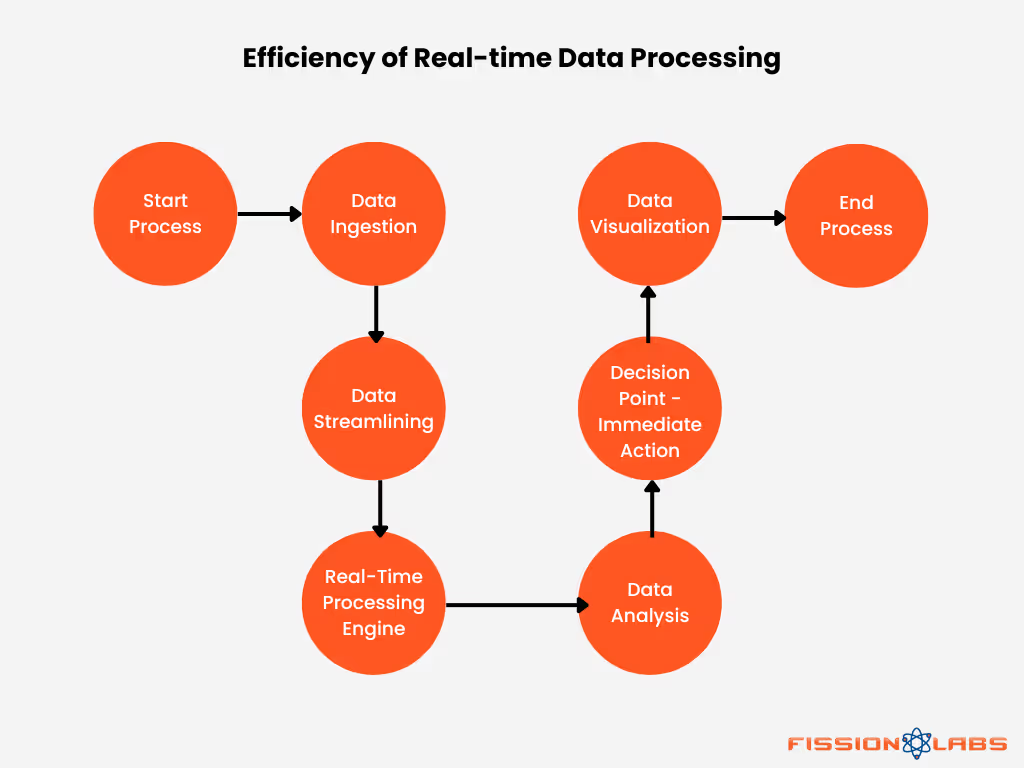

Real-Time Data Processing Masters

The Need for Real-Time Insights

In a rapidly changing world, having real-time insights is often the key to successful decision-making. Our data engineers are masters at real-time data processing, ensuring that your project remains synchronized with the latest information. Whether it's monitoring user activity, stock prices, or IoT data, our engineers have the skills to make real-time data processing a reality.

Streamlining Data Flows

Real-time data processing is not just about speed; it's about ensuring that data flows seamlessly and is processed without delays. Our engineers are experts at streamlining data flows to ensure that data is processed in real-time. This guarantees that you're always working with the most up-to-date information.

Immediate and Informed Decisions

With real-time data processing, you can make immediate and informed decisions. Whether it's responding to user behavior, market changes, or operational data, our data engineers ensure that your project can act on data as it arrives, providing a competitive edge.

Data Warehousing Excellence

Structured and Accessible Data

For data to be valuable, it needs to be organized and easily accessible. Fission Labs' data engineers excel in designing and maintaining data warehouses. They ensure that your data is structured efficiently, and ready for reporting and analysis. Data warehousing is the foundation for effective data analysis, and our engineers make sure that your data is in the best possible shape.

Data Organization and Integrity

Data warehousing involves more than just storage; it's about maintaining data integrity and consistency. Our data engineers understand the importance of data organization and work meticulously to ensure that your data remains accurate and trustworthy. This level of data integrity is what sets your project up for reliable and insightful analysis.

Performance-Optimized Data Warehouses

Data warehouses need to be not just organized but also optimized for performance. Our engineers design data warehouses that ensure data retrieval is efficient, and reporting and analysis are fast. This means that you get the insights you need without long wait times, ensuring that your project remains agile and responsive.

Data Integration Virtuosos

The Data Integration Challenge

In today's diverse data ecosystem, data integration is a critical challenge. Data comes from multiple sources, in various formats, and often needs to be harmonized for meaningful analysis. Fission Labs' data engineers are virtuosos when it comes to seamlessly integrating data from diverse sources. They ensure that your analytics are comprehensive and cohesive, regardless of the data's origins.

Unified Data for Better Analysis

Data integration is about unifying data from different sources to provide a holistic view. Our data engineers ensure that your data can be combined and analyzed cohesively. This means you get a unified view of your data landscape, enabling you to derive insights that might be missed when data is siloed.

Custom Integration Solutions

Off-the-shelf integration solutions don't always fit the bill. Our data engineers craft custom integration solutions tailored to your project's unique requirements. This customization ensures that your data is integrated with precision, and your analytics are based on a complete and accurate dataset.

Custom Solution Accelerators

Data Connectors

In the world of data engineering, the first big task is gathering information from various places – databases, web sources, files, and more. No matter where the data is, making sure we can smoothly connect and pull it out is key to making data projects work.

Our data engineers have skillfully crafted connectors – like superhighways for data – designed to effortlessly simplify and enhance the data process, making it both easy and efficient.

Our connectors act as bridges, linking up with various data sources such as databases, file systems, APIs, and more. The magic happens behind the scenes through the power of Python and the efficiency of PySpark. These tools work together seamlessly to establish connections, and retrieve and organize data, making the entire process smooth and efficient.

Picture it like this: when you want to access information from databases or files or pull data from APIs, our connectors, powered by the simplicity of Python and the prowess of PySpark, create a direct and efficient route. It's like having a reliable guide that effortlessly navigates through different data landscapes, ensuring you can access what you need without any hassle.

So, while we won't delve into the technicalities, just know that our connectors are here to make your data journey easy, efficient, and hassle-free. They're the unsung heroes working behind the scenes, ensuring your data flows seamlessly, and allowing you to focus on what matters – deriving valuable insights.

Time-Efficient Data Transformations with DBT

DBT stands at the heart of efficient data management, turning challenges into triumphs. It's more than just a tool; it represents a whole new approach to data transformation and modeling.

DBT captivates data engineers with its modular design and version control, streamlining the development cycle. Using SQL-powered transformations, promotes team collaboration, while built-in data lineage tracking ensures transparent and reliable data transformations. DBT's features create a seamless environment, emphasizing consistency and reliability in the transformation journey.

Integrating DBT boosts our efficiency, enhancing precision and reliability in data transformations. As we push the boundaries of data engineering, DBT stands as a key tool in our pursuit of excellence, underscoring our commitment to delivering unmatched value to clients.

Python Web Scraping Boilerplate

Web scraping, the process of extracting data from websites, is simplified through our Python-based template. Leveraging Python libraries, we created a streamlined framework, offering pre-built functions for efficient and adaptable data extraction, empowering clients with scalable solutions for diverse website structures.

Implementing our Python-based web scraping template as a solution accelerator yielded significant improvements. Clients experienced streamlined data extraction processes, reduced development timelines, and increased adaptability to various websites. The template's efficiency not only enhanced productivity but also provided a scalable solution for evolving data extraction requirements.

By streamlining data extraction processes, it enhances operational efficiency and productivity. The template's adaptability to diverse website structures and scalability ensures that clients have a reliable and efficient solution that can evolve with their changing data extraction needs. This not only reduces development timelines but also empowers clients to focus on deriving actionable insights from the extracted data, ultimately contributing to informed decision-making and strategic planning.

Championing Data Modeling Best Practices

The Art and Science of Data Modeling

Data modeling is both an art and a science. It's about creating a framework that represents data and its relationships accurately. Our data engineers are champions of data modeling best practices. They employ industry standards and their expertise to create effective data models that drive insightful analysis and decision-making.

Ensuring Data Consistency

Data modeling is crucial for ensuring data consistency. Inconsistent data can lead to unreliable analysis and decisions. Our engineers work diligently to create data models that maintain data integrity and consistency, ensuring that your project is built on a solid foundation.

Adapting to Project Needs

Every project is unique, and data modeling needs to adapt to those unique requirements. Our data engineers are skilled at tailoring data modeling to your project's needs. Whether it's a complex database structure, a data warehouse schema, or a machine learning model, they create data models that align with your project's objectives and complexities.

Data Governance: Ensuring Data Quality and Compliance

Data Quality Assurance

Rigorous measures are in place to ensure the accuracy, completeness, and consistency of your data. Our data engineers implement robust validation processes, minimizing errors and enhancing the reliability of your data assets.

Compliance and Security

Fission Labs prioritizes data security and compliance with industry regulations. Our data engineers adhere to the best practices and standards to safeguard your data, ensuring that it meets regulatory requirements and industry standards.

Metadata Management

Comprehensive metadata management is integral to our data governance strategy. By meticulously documenting data lineage, definitions, and relationships, we empower your team with the information needed to make informed decisions and maintain data integrity.

Collaboration with AI/ML Teams: Orchestrating Holistic Solutions

Data Preparation for AI/ML

Our data engineers play a pivotal role in preparing and shaping data for AI/ML initiatives. They understand the unique requirements of machine learning models, ensuring that the data fed into these models is of the highest quality and relevance.

Feature Engineering

Working hand-in-hand with AI/ML teams, our data engineers contribute to feature engineering, identifying and creating the most impactful features for model training. This collaborative effort ensures that the models are well-informed and capable of extracting meaningful insights.

Scalable Data Infrastructure

As AI/ML projects often deal with large volumes of data, our engineers design and implement scalable data infrastructure to support these initiatives. Whether it's optimizing data storage or ensuring efficient data retrieval, our solutions are tailored to meet the demands of advanced analytics.

Real-Time Data Integration

For real-time AI/ML applications, our data engineers excel in creating seamless data integration pipelines. This enables AI/ML models to operate on the most up-to-date information, enhancing the accuracy and relevance of the insights generated.

Continuous Iteration and Improvement

Collaboration doesn't end with deployment. Fission Labs fosters a culture of continuous improvement, where our data engineers work collaboratively with AI/ML teams to iterate on models, refine algorithms, and adapt data strategies based on real-world performance.

Certified Data Engineers on Databricks, Cloudera, and Snowflake

Databricks Certified Data Engineers

Databricks is a leading platform for data engineering and data science. Our data engineers hold Databricks certifications, signifying their proficiency in using this platform to streamline data processing, analytics, and machine learning. Databricks is known for its capabilities in simplifying data processing, and our engineers leverage these capabilities to optimize your data pipelines and analytics processes.

Cloudera Certified Data Engineers

Cloudera is a recognized leader in the field of big data and data engineering. Fission Labs' data engineers are Cloudera Certified Data Engineers, showcasing their mastery in working with Cloudera's distribution of Hadoop and its ecosystem. This certification ensures that they can handle complex data processing tasks with precision and efficiency, making them the perfect choice for projects involving large-scale data.

Snowflake Certified Data Engineers

Snowflake is a cloud data warehousing platform that is gaining prominence in the industry. Our data engineers are Snowflake Certified, demonstrating their competence in designing, building, and maintaining data warehouses on this platform. This certification assures that your data will be efficiently stored and readily accessible for analysis, whether it's on-premises or in the cloud.

Case Study - Our Success Stories Speak for Themselves

The Challenge

Our client approached Fission Labs with a vision to upgrade its Enterprise HRMS and build a Quantum Labour Analysis platform to reskill the planet bridging the gap between supply and demand concerning jobs, skills, and profiles. The client wanted a platform that could handle vast amounts of data from various sources, including employee information, project details, skill sets, and more. The primary challenge was to create a reliable and scalable platform capable of handling a large volume of data while ensuring quick and efficient analysis.

The Solution Delivered

Fission Labs' engineering team decided to use Databricks, a cloud-based data processing platform that enables data engineering, machine learning, and analytics at scale. Databricks' advanced analytics capabilities, combined with its ability to handle big data, made it the perfect choice for the HRMS & Labour Analysis Platform. The team also utilized other cutting-edge cloud-based technology such as AWS and Azure for data storage, processing, and analysis.

Data Integration and Cleansing

The first step in building the HRMS & Labour Analysis Platform was to integrate and cleanse the data. The engineering team used a variety of tools to extract, transform, and load the data from different sources into Databricks. They also applied various data cleansing techniques to ensure that the data was accurate and consistent.

Data Modeling

Once the data was integrated and cleansed, the team began data modeling. They designed a database schema that could handle the large volume of data and support quick and efficient analysis. The team used Databricks' Delta Lake, a versioned data lake that integrates with Apache Spark, to store the data in a scalable and reliable way. Delta Lake provided the team with the ability to perform ACID transactions on large datasets, ensuring data consistency.

Machine Learning and AI

The HRMS & Labour Analysis Platform utilized machine learning and AI to automate the process of identifying critical and emerging skills. The platform analyzed employee profiles and project details to identify skill gaps and training needs. The team utilized Databricks' MLflow, an open-source platform for managing and deploying machine learning models, to develop and deploy machine learning models in a scalable and reliable way.

The team used various machine learning algorithms, such as clustering and regression, to analyze the data and identify patterns. They also used natural language processing (NLP) techniques to extract and analyze data from unstructured sources such as job descriptions and performance reviews. The team used Azure Cognitive Services to perform sentiment analysis on text data and extract keywords.

Real-time Analysis

The HRMS & Labour Analysis Platform provided real-time analysis, allowing subscribers to identify emerging and critical skills as they happen. The team utilized Databricks' streaming capabilities, which integrate with Apache Spark, to enable real-time data processing and analysis. The team used Azure Event Hubs to ingest and process data in real-time.

The platform also utilized Power BI, a business intelligence tool, to visualize the data and provide insights to subscribers. Power BI provided real-time dashboards and reports that enabled subscribers to monitor key metrics such as workforce productivity, training needs, and skill gaps.

The Result

Our designed & delivered HRMS & Labour Analysis Platform has become a highly valuable tool for businesses and HR professionals, enabling them to identify emerging and critical skills in real time. With this platform, subscribers can easily deconstruct employee profiles, understand their current skill sets, and identify areas for development. This has resulted in more effective talent management and employee development programs, enabling businesses to stay ahead of the competition in today's fast-paced and rapidly evolving job market.

Unlock Your Data's Potential

The path to data-driven success starts with making the right choice. Fission Labs' data engineers offer not just expertise but a commitment to excellence. Your projects deserve nothing less.

By choosing Fission Labs as your data engineering partner, you're choosing:

Masterful ETL Processes

- Custom ETL solutions for your unique project needs.

- Seamless data flow, ensuring data availability and integrity.

Proficiency in SQL and Python

- Mastery of SQL for powerful data querying and optimization.

- Adept use of Python for versatile data solutions.

Big Data Handling Expertise

- Efficient data storage and retrieval for large-scale data.

- Scalable solutions for future data growth.

Hadoop and Spark Champions

- Customized data processing solutions.

- Efficient handling of big data and real-time data processing.

Cloud Data Pipelines

- Scalable and cost-effective data solutions in the cloud.

- Expertise in leading cloud platforms.

Real-Time Data Processing Masters

- Immediate and informed decision-making.

- Streamlined real-time data flows for timely insights.

Data Warehousing Excellence

- Organized and performance-optimized data warehouses.

- Data organization and integrity for reliable analysis.

Data Integration Virtuosos

- Unified data for comprehensive analysis.

- Custom integration solutions for diverse data sources.

Championing Data Modeling Best Practices

- Effective data models for insightful analysis.

- Adaptable modeling to project needs.

Certified Data Engineers on Databricks, Cloudera, and Snowflake

- Expertise validated by industry-leading certifications.

- Optimized data processing, analytics, and warehousing.

The journey to unlocking your data's potential starts here.

Contact Us today, and take the first step toward making your data-driven future a reality. Your projects deserve the best, and Fission Labs' data engineers are here to deliver excellence. Don't miss the opportunity to leverage our expertise—your data's full potential is just a click away.